ROTC Cadets meet User-Centered Design

ROTC cadets (students training for military roles) experience situational visual impairment during ground movements at night. That's a fancy way of saying they can't move quickly at night because they can't see. We set out to change that through user-centered design methods.

Problem

Moving from point A to B is more difficult during the night than the day. Night movements are allocated double the time of day movements.

Opportunity

Reduce the time needed to complete ground movements at night.

Solution

Send haptic directional cues to ROTC cadets through vibration motors embedded in a hat.

Lesson Learned

Don't take evaluation methods for granted. These will make or break you.

My Role: Client & User Relations, Research, Some Prototyping | Team: Sahib Singh, Amit Garg, John Crisp, Bryan Bennett

PROCESS

Our team followed a four-phase user-centered design approach: discover our environment, define our users' problem, develop solutions, and deliver them, testing iteratively along the way.

Discover > Define > Develop > Deliver

DISCOVER | Ethnography + Interviews + Secondary Research

Our ethnographic research began when we joined the ROTC cadets on a low-light ruck march up Kennesaw Mountain at 4 am one morning. During the exercise, we experienced alongside the cadets what it is like to stumble up a mountain, through rocks and brush, in almost complete darkness. After the march ended, we had the opportunity to speak freely with cadets in impromptu, unstructured interviews.

Admittedly, this experience did not fully match the real-life scenario of undercover ground movements: the march was a training exercise, glow sticks were used as a safety precaution, and voice commands were allowed to help new team members acclimate to their first march. We therefore supplemented our research with semi-structured interviews with both cadets and training officers, conducting 7 formally scheduled interviews and several impromptu ones overall.

In parallel with user research, we began secondary research and a literature review to understand the current landscape of research in the ROTC and military community.

DEFINE | Affinity Maps + Communication Models + Constraints + Problem Identification

After the march, and again after every few interviews, we flowed organically into the Define phase, meeting as a team to debrief and share our experiences and findings from the march, interviews, and secondary research. We used affinity mapping to collect thoughts and start exploring emerging trends. These sessions prompted more questions from our team, which we used to direct further interviews with our population.

These further interview sessions helped us nail down needs, wants, and constraints. For example, commanding officers expressed a need for solutions that could exist in a real-world army environment following graduation, and cadets expressed a need for low weight because their ruck sacks were already pushing 40 pounds. In these sessions, we also established chain-of-command relationships and how they shape the flow of communication between cadets.

Through the define phase we identified our problem space and built our opportunity statement.

Moving from point A to B is more difficult and takes longer to complete during the night than the day. There is an opportunity to help cadets reduce travel time at night.

DEVELOP | Brainstorm + Concept Design + Feedback + Participatory Design + Prototype

The Development phase consisted of several brainstorming sessions, where we used a collaborative “Yes and…” culture to rapidly form dozens of ideas. Later, we took time to flesh out the ideas a bit further before evaluating them on feasibility and on whether they truly fit the constraints we had identified earlier. Ultimately, we were left with three viable ideas and developed them into complete concepts: a bread crumb device much like the Hansel and Gretel fairy tale, pressure-sensitive sleeves worn by all cadets that communicated based on gestures, and an accelerometer glove that could read hand and arm signals and translate them to a haptic vest worn by all cadets.

We presented these designs to our peers, receiving unbiased, third-party feedback on our methods. Their criticism reassured us that our methods were truly user-centered and helped us identify other technologies that could support our solutions. However, their feedback could not point us in the best direction for our intended population, so we presented our ideas to the Commanding Officers. Because their feedback found good and bad aspects in each design, we later held a participatory design session with them to design a solution with better buy-in and feasibility for everyone.

This design session led to our final design: a hat with vibration motors, worn by all cadets, that could receive directional commands and translate them into vibration cues. For example, the cue for moving forward was a vibration at the front of the head, while left and right commands produced a vibration on the left and right sides of the head, respectively.

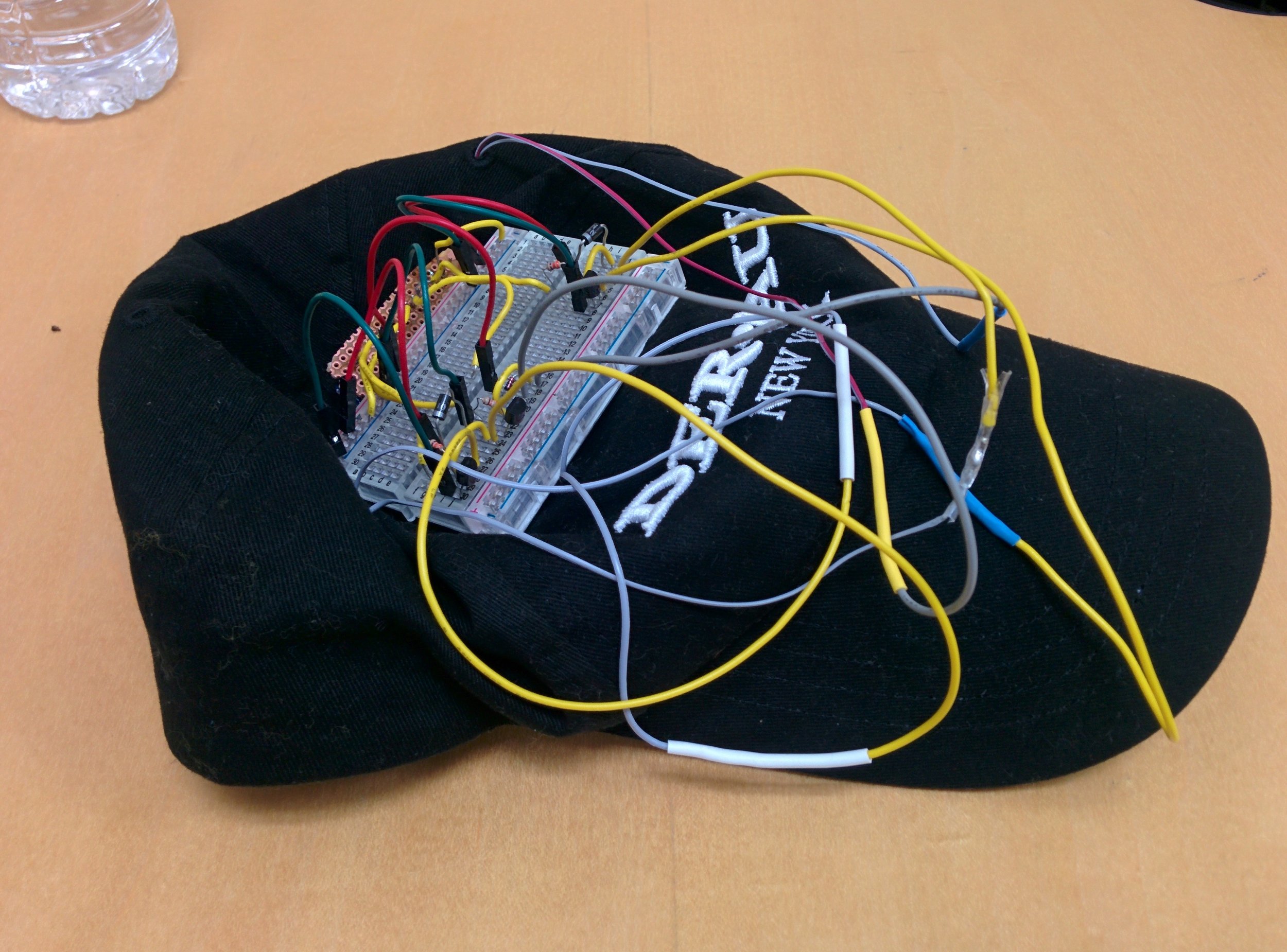

We prototyped the device using an Adafruit Bluefruit microcontroller board, which comes with a ready-made mobile app. By rewriting code intended for LED outputs, we quickly hooked up vibration motor outputs and mapped them to the directional arrows in the app.
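The mapping from directional command to vibration cue can be sketched in a few lines. This is a minimal illustration in Python, not the firmware that ran on the hat: the `Direction` enum, the `MOTOR_FOR_DIRECTION` table, the `motors_to_pulse` function, and the motor position names are all hypothetical, and only the three commands described above are covered.

```python
from enum import Enum


class Direction(Enum):
    """Directional commands described in the write-up (names are assumptions)."""
    FORWARD = "forward"
    LEFT = "left"
    RIGHT = "right"


# Assumed motor positions around the hat band, one per command.
MOTOR_FOR_DIRECTION = {
    Direction.FORWARD: "front",
    Direction.LEFT: "left",
    Direction.RIGHT: "right",
}


def motors_to_pulse(command: str) -> list[str]:
    """Return the motor positions to vibrate for a command.

    Unknown commands return an empty list so that nothing vibrates.
    """
    try:
        direction = Direction(command.strip().lower())
    except ValueError:
        return []
    return [MOTOR_FOR_DIRECTION[direction]]


if __name__ == "__main__":
    for cmd in ("forward", "left", "right", "stop"):
        print(cmd, "->", motors_to_pulse(cmd))
```

Keeping the mapping in a single table means the cue layout can be changed without touching the code that receives commands.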

Ultimately, our final solution was an entire communication system, complete with transmitters and receivers. We chose to prototype only the receiver (the vibration hat), though, because this part of the designed system would greatly affect navigation time and inform future iterations of the entire system.

DELIVER | Heuristic Evaluation + User Testing

Following prototyping, we entered our final phase of the project: Delivery. This was the heuristic evaluation & user-testing phase.

It’s important to note that during discovery and development, we were submitting paperwork to the IRB for ethical approval of this study. Because the ROTC program falls under the Department of Defense, we needed special government clearances. At the time of testing, we had not yet received that approval. Anticipating this challenge, we had submitted a second study that used general college students as its population and repositioned the device for a game-like, hiking-in-the-dark scenario.

While waiting for ethics board approval, we performed a heuristic evaluation with four experts to evaluate the usability of our prototyped hat. We reviewed established wearable device heuristics and found that the published ones were either overly general or too specific to a particular kind of wearable. So we pieced together our own, focusing on detectability, distinguishability, learnability, wearability, and efficiency. Our experts found that the device wasn't uncomfortable and that they could indeed feel the vibration motors and correctly interpret their directional meaning. However, a major challenge in the heuristic evaluation was helping our experts get into the mindset of our target group. Our experts didn’t know the nuances and learned behaviors of a highly trained ROTC group; they could only imagine them. This led to poor scores in wearability and efficiency. We documented the critique for further consideration once higher-fidelity prototypes could be developed. As an immediate change, we improved the code to reduce the delay between a directional command being given and the hat receiving it.

After gaining approval for the generic hiking-in-the-dark device, we ran a first-pass user test of the hat with 6 participants on an obstacle course, followed by an exit survey. We used a within-subjects experiment, timing each participant through the course under three navigational cue conditions: line-of-sight hand and arm signals, voice directions using bonephones, and haptic signals using our prototyped vibration hat. To reduce bias from learning effects, the order of conditions was randomized for each participant, meaning one participant might start with verbal directions while another would start with haptic signals.

From a quantitative perspective, the haptic hat produced faster movement times than voice directions or hand and arm signals. As expected, hand and arm signals performed the poorest, with mean times of over 80 seconds. Teasing out the difference between haptic and voice cues quantitatively is more difficult, because the design of the test was flawed. We had presumed that a human observer could visually catch the time difference between sending a directional cue and the subject acting on that cue. Overall, completion times from start to finish suggest that haptic signals were faster than voice cues, but that conclusion is not firm because of the potential for human error in timing. In addition, the results are not statistically significant because of the small sample size, and the tests were ultimately not run with our intended target population.

However, from a qualitative perspective, the exit surveys suggest that subjects found the vibration hat easier and more intuitive to understand than the verbal directions, because the left and right directions were already mapped to the left and right sides of the head, eliminating the time needed to process the command. Vibration cues could be acted on instantly, while verbal cues needed to be translated from a single word to a meaning and then to an action. This feedback suggested that the research is on the right track, and that revising the evaluation method and testing with the intended population could provide stronger support for moving forward with the entire communication system.
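The per-participant randomization of condition order can be sketched as follows. This is an illustrative Python sketch under assumptions, not the procedure the team actually ran: the `CONDITIONS` labels, the `assign_orders` function, and the seed value are hypothetical.

```python
import random

# Short labels for the three cue conditions (wording is an assumption).
CONDITIONS = ["hand and arm signals", "voice directions", "haptic hat"]


def assign_orders(participant_ids, seed=None):
    """Give each participant an independently shuffled order of all conditions.

    Passing a seed makes the assignment reproducible for record keeping.
    """
    rng = random.Random(seed)
    orders = {}
    for pid in participant_ids:
        order = CONDITIONS.copy()
        rng.shuffle(order)  # in-place shuffle of this participant's copy
        orders[pid] = order
    return orders


if __name__ == "__main__":
    for pid, order in assign_orders(range(1, 7), seed=0).items():
        print(f"P{pid}: {', '.join(order)}")
```

Three conditions have exactly six possible orders, so with six participants an alternative would be full counterbalancing, where each order is used once. That is a design option, not something the study reports doing.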
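The per-condition comparison comes down to averaging completion times by cue condition. Here is a minimal Python sketch, assuming each trial is recorded as a hypothetical `(participant_id, condition, seconds)` tuple; the function name and record layout are illustrative, and the study's actual timings are not reproduced here.

```python
from statistics import mean


def mean_time_by_condition(trials):
    """Average completion time per condition.

    trials: iterable of (participant_id, condition, seconds) tuples.
    Returns a dict mapping each condition to its mean time in seconds.
    """
    by_condition = {}
    for _pid, condition, seconds in trials:
        by_condition.setdefault(condition, []).append(seconds)
    return {cond: mean(times) for cond, times in by_condition.items()}
```

A mean alone says nothing about significance. With six participants, the averages are best read as a rough signal, which matches the caution above.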

LOOKING FORWARD & LESSONS LEARNED

Our semester ended before we could obtain approval to test with ROTC cadets. We believe there is merit in moving forward, but as of now we do not intend to pursue this direction.

Overall, though, this project challenged us in many ways, from paperwork and red-tape headaches to thought exercises on how to explore and design for new and rapidly changing technologies in ambiguous landscapes.

The lesson I take to heart is that evaluation is just as important as discovery. I've been an avid champion of user research for informing design (after all, it's my favorite part: curiosity and talking to people). The culmination of our work, though, is hard to support because of a flawed evaluation design. I don't mean to suggest that we didn't plan this part, because we did (building scenarios and steps, asking nearby classmates to be our guinea pigs, and revising the plan), but we didn't think to explore the constraints of the technology we used to actually take the measurements! Going forward, I'll take that less for granted and be sure to consider those angles.

For more details on the project and our delivered reports visit: http://teamhittheroadjack.weebly.com/